We have a customer scenario where we are going to store larger files (blueprints), and we think they are too big to store in SQL Server itself through the DbBinaryObjectManager.

This is mostly because of the increased storage cost and the time it takes to read and save binary data in the database itself.

We are now looking into building an Azure Blob Storage manager that we can use in our ASP.NET Zero project. Any tips on how to do that would be appreciated. Has anyone built their own Azure Blob Storage manager that can be used in parallel with the built-in DbBinaryObjectManager?

All help and tips are welcome.

3 Answer(s)

-


I recently added Azure Blob Storage to my application, with container generation based on the tenancy name. In hindsight, the approach I took was probably overkill, but I went with a generic implementation to allow for additional storage options in the future. I created entities for ConfigurationProvider and ConfigurationProviderParameter, so in this example the provider would be "Azure Blob Storage" and the parameters hold entries for things like DefaultContainerName and ConnectionString. Then there are entities for ConfigurationEntry (IMayHaveTenant) and ConfigurationEntrySetting, which hold tenant-specific values for my provider parameters.

After that point I have abstract classes for my providers, and an implementation of that base class for MicrosoftAzureBlobStorageProvider, which handles the communication to and from Azure. I should note that I'm using the Abp.Dependency.IIocResolver class to dynamically resolve the class needed. The class name is stored in the ConfigurationProvider table, e.g. 'Xyz.Factory.Storage.Providers.MicrosoftAzureBlobStorageProvider, Xyz.Application'.
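As a rough illustration of the idea of loading a provider from a class name kept in a table, here is a minimal sketch. Every name in it (StorageProviderBase, InMemoryStorageProvider, StorageProviderFactory) is hypothetical and not taken from my actual code, and it uses plain reflection (Type.GetType plus Activator.CreateInstance) instead of IIocResolver so that it runs without ABP or Azure. In an ABP application you would presumably hand the resolved Type to the IoC resolver instead.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Threading.Tasks;

// Hypothetical base class; a real Azure provider would derive from this.
public abstract class StorageProviderBase
{
    // Tenant-specific settings, e.g. "ConnectionString" or "DefaultContainerName".
    public IDictionary<string, string> Settings { get; set; } = new Dictionary<string, string>();

    public abstract Task SaveAsync(string container, string name, Stream content);
    public abstract Task<Stream> OpenReadAsync(string container, string name);
}

// Stand-in provider that keeps blobs in memory so the sketch runs without Azure.
public class InMemoryStorageProvider : StorageProviderBase
{
    private readonly Dictionary<string, byte[]> _blobs = new Dictionary<string, byte[]>();

    public override async Task SaveAsync(string container, string name, Stream content)
    {
        using (var buffer = new MemoryStream())
        {
            await content.CopyToAsync(buffer);
            _blobs[container + "/" + name] = buffer.ToArray();
        }
    }

    public override Task<Stream> OpenReadAsync(string container, string name)
    {
        Stream stream = new MemoryStream(_blobs[container + "/" + name], writable: false);
        return Task.FromResult(stream);
    }
}

public static class StorageProviderFactory
{
    // typeName is the assembly-qualified class name stored in the provider table.
    public static StorageProviderBase Create(string typeName, IDictionary<string, string> settings)
    {
        Type type = Type.GetType(typeName, throwOnError: true);
        if (!typeof(StorageProviderBase).IsAssignableFrom(type))
        {
            throw new InvalidOperationException(typeName + " is not a storage provider.");
        }

        var provider = (StorageProviderBase)Activator.CreateInstance(type);
        provider.Settings = settings;
        return provider;
    }
}
```

The check against the base type is there so that a bad value in the table fails with a clear message rather than an invalid cast later on.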

If you are not planning on switching between providers, you could greatly simplify this by going straight to AppSettings entries for your Azure configuration.

Upload references are stored in a separate table. So as not to continuously ping Azure, I save to my db table things like contentType, fileSize, directUrl to the file (assuming it's in a public container), ETag, and some basic metadata (dimensions for images, for example).
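To make that concrete, a reference row could look something like the sketch below. The class and property names are hypothetical (only contentType, fileSize, directUrl and ETag come from the description above), and the IsStale helper is just one possible use of the cached ETag.

```csharp
using System;

// Hypothetical entity holding what is cached locally about an uploaded blob.
public class UploadReference
{
    public int? TenantId { get; set; }
    public string ContainerName { get; set; }
    public string BlobName { get; set; }
    public string ContentType { get; set; }
    public long FileSize { get; set; }
    public string DirectUrl { get; set; }
    public string ETag { get; set; }

    // Basic metadata; only filled in for images.
    public int? ImageWidth { get; set; }
    public int? ImageHeight { get; set; }

    // True when the cached ETag no longer matches the one reported by storage.
    public bool IsStale(string currentETag)
    {
        return !string.Equals(ETag, currentETag, StringComparison.Ordinal);
    }
}
```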

The nice thing with Azure is that if your containers are public, you can save the path to the file and serve it directly to a user to save on your bandwidth. If you are working with blueprints you may need a little additional security, in which case you can mark your container as private, still store the direct path to the file on your end, and use the Azure API to essentially stream the file to your server and then pass it back out to the end user. That way you can be sure files are protected against unauthorized access by anyone who happens to know the URL.

Hope that helps!
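The private-container flow boils down to "check the caller first, then copy the blob stream into the response". A minimal sketch of that shape follows; ProtectedDownload and its parameters are hypothetical names, and openBlob stands in for whatever storage call you use to open the blob, so nothing here touches Azure directly.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

public static class ProtectedDownload
{
    // Copies the blob to the response only when the caller is authorized.
    // openBlob is a placeholder for the storage call that opens the private blob.
    public static async Task<bool> TryStreamAsync(
        bool callerIsAuthorized,
        Func<Task<Stream>> openBlob,
        Stream response)
    {
        if (!callerIsAuthorized)
        {
            // Storage is never contacted for unauthorized callers.
            return false;
        }

        using (Stream source = await openBlob())
        {
            await source.CopyToAsync(response);
        }

        return true;
    }
}
```

Because the blob is copied stream to stream, the server does not need to hold the whole blueprint in memory at once.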

-


Hi webking

This should get you started:

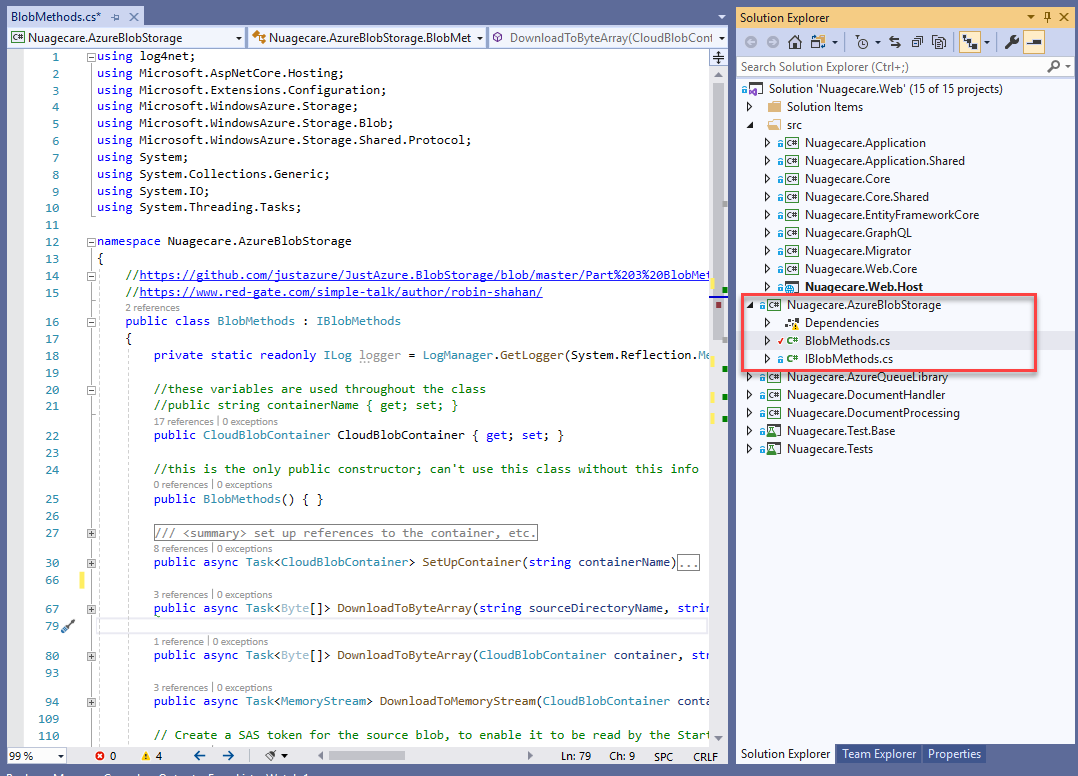

```csharp
using log4net;
using Microsoft.AspNetCore.Hosting;
using Microsoft.Extensions.Configuration;
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.Blob;
using Microsoft.WindowsAzure.Storage.Shared.Protocol;
using System;
using System.Collections.Generic;
using System.IO;
using System.Threading.Tasks;

namespace Nuagecare.AzureBlobStorage
{
    // https://github.com/justazure/JustAzure.BlobStorage/blob/master/Part%203%20BlobMethods/BlobMethods/BlobMethods.cs
    // https://www.red-gate.com/simple-talk/author/robin-shahan/
    public class BlobMethods : IBlobMethods
    {
        private static readonly ILog logger = LogManager.GetLogger(System.Reflection.MethodBase.GetCurrentMethod().DeclaringType);

        // these variables are used throughout the class
        public CloudBlobContainer CloudBlobContainer { get; set; }

        // this is the only public constructor; can't use this class without this info
        public BlobMethods()
        {
        }

        /// <summary>
        /// set up references to the container, etc.
        /// </summary>
        public async Task<CloudBlobContainer> SetUpContainer(string containerName)
        {
            var env = AppDomain.CurrentDomain.GetData("HostingEnvironment") as IHostingEnvironment;
            string currentDir = Directory.GetCurrentDirectory();

            // env can be null if nothing stored it in the AppDomain; the second file is optional anyway
            var builder = new ConfigurationBuilder()
                .SetBasePath(currentDir)
                .AddJsonFile("appsettings.json", optional: false, reloadOnChange: true)
                .AddJsonFile($"appsettings.{env?.EnvironmentName}.json", optional: true);
            var configuration = builder.Build();
            string connectionString = configuration.GetConnectionString("AzureStorage");

            // get a reference to the container where you want to put the files
            CloudStorageAccount cloudStorageAccount = CloudStorageAccount.Parse(connectionString);
            CloudBlobClient cloudBlobClient = cloudStorageAccount.CreateCloudBlobClient();
            await cloudBlobClient.SetServicePropertiesAsync(new ServiceProperties()
            {
                DefaultServiceVersion = "2018-03-28"
            });

            CloudBlobContainer = cloudBlobClient.GetContainerReference(containerName);
            if (await CloudBlobContainer.CreateIfNotExistsAsync())
            {
                await CloudBlobContainer.SetPermissionsAsync(
                    new BlobContainerPermissions
                    {
                        // set access level to "blob", which means user can access the blob but not look through the whole container
                        // this means the user must have a URL to the blob to access it; given the GUID of the resident is in the path
                        // it's almost as good as an SAS but doesn't expire
                        // the alternative is to create an SAS and pass it down to angular so it can be called in the MS widget
                        PublicAccess = BlobContainerPublicAccessType.Blob // for test
                    }
                );
            }

            return CloudBlobContainer;
        }

        public async Task<byte[]> DownloadToByteArray(string sourceDirectoryName, string fileName)
        {
            // same as the overload below, using the container held by this instance
            return await DownloadToByteArray(CloudBlobContainer, sourceDirectoryName, fileName);
        }

        public async Task<byte[]> DownloadToByteArray(CloudBlobContainer container, string sourceDirectoryName, string fileName)
        {
            CloudBlobDirectory sourceCloudBlobDirectory = container.GetDirectoryReference(sourceDirectoryName);
            CloudBlockBlob blob = sourceCloudBlobDirectory.GetBlockBlobReference(fileName);

            // you have to fetch the attributes to read the length
            await blob.FetchAttributesAsync();
            long fileByteLength = blob.Properties.Length;
            byte[] myByteArray = new byte[fileByteLength];
            await blob.DownloadToByteArrayAsync(myByteArray, 0);
            return myByteArray;
        }

        public async Task<MemoryStream> DownloadToMemoryStream(CloudBlobContainer container, string blobUri)
        {
            var blobName = new CloudBlockBlob(new Uri(blobUri)).Name;
            CloudBlockBlob blob = container.GetBlockBlobReference(blobName);

            // download straight into the stream we hand back; the caller disposes it
            var stream = new MemoryStream();
            await blob.DownloadToStreamAsync(stream);
            stream.Position = 0;
            return stream;
        }

        // Create a SAS token for the source blob, to enable it to be read by the StartCopyAsync method
        private static string GetSharedAccessUri(string blobName, CloudBlobContainer container)
        {
            DateTime toDateTime = DateTime.Now.AddMinutes(60);
            SharedAccessBlobPolicy policy = new SharedAccessBlobPolicy
            {
                Permissions = SharedAccessBlobPermissions.Read,
                SharedAccessStartTime = null,
                SharedAccessExpiryTime = new DateTimeOffset(toDateTime)
            };

            CloudBlockBlob blob = container.GetBlockBlobReference(blobName);
            string sas = blob.GetSharedAccessSignature(policy);
            return blob.Uri.AbsoluteUri + sas;
        }

        public async Task<string> CreateSnapshot(string targetFileName)
        {
            CloudBlockBlob blob = CloudBlobContainer.GetBlockBlobReference(targetFileName);
            blob.Metadata["NcDocumentHistoryId"] = "";
            await blob.CreateSnapshotAsync();
            return blob.SnapshotQualifiedStorageUri.PrimaryUri.ToString();
        }

        public async Task<CloudBlockBlob> UploadFromByteArray(byte[] uploadBytes, string targetFileName, string blobContentType)
        {
            CloudBlockBlob blob = CloudBlobContainer.GetBlockBlobReference(targetFileName);
            blob.Properties.ContentType = blobContentType;
            await blob.UploadFromByteArrayAsync(uploadBytes, 0, uploadBytes.Length);
            blob.Properties.CacheControl = "no-cache";
            await blob.SetPropertiesAsync();
            return blob;
        }

        public async Task<CloudBlockBlob> UploadFromByteArray(byte[] uploadBytes, string targetFileName, string blobContentType, IDictionary<string, string> MetaData, string cacheControl)
        {
            CloudBlockBlob blob = CloudBlobContainer.GetBlockBlobReference(targetFileName);
            blob.Properties.ContentType = blobContentType;
            foreach (var metadata in MetaData)
            {
                blob.Metadata[metadata.Key] = metadata.Value;
            }

            await blob.UploadFromByteArrayAsync(uploadBytes, 0, uploadBytes.Length);
            blob.Properties.CacheControl = cacheControl;

            // uncached blobs are treated as frequently accessed (hot tier), everything else goes to cool
            if (cacheControl == "no-cache")
            {
                await blob.SetStandardBlobTierAsync(StandardBlobTier.Hot);
            }
            else
            {
                await blob.SetStandardBlobTierAsync(StandardBlobTier.Cool);
            }

            await blob.SetPropertiesAsync();
            return blob;
        }

        public async Task UpdateMetadata(CloudBlockBlob blob, long NcMediaId)
        {
            blob.Metadata["NcMediaId"] = NcMediaId.ToString();
            await blob.SetMetadataAsync();
        }

        public async Task<bool> BlobExists(string sourceFolderName, string fileName)
        {
            CloudBlobDirectory sourceCloudBlobDirectory = CloudBlobContainer.GetDirectoryReference(sourceFolderName);
            return await sourceCloudBlobDirectory.GetBlockBlobReference(fileName).ExistsAsync();
        }
    }
}
```

Create a separate project away from your Zero project. Create the interface to go with it:
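The interface itself did not make it into the post. A sketch of what it presumably looks like, inferred from the public members of BlobMethods, is below. The generic return types are assumptions based on what each method returns, the blobUri parameter name is illustrative, and the block needs the same Microsoft.WindowsAzure.Storage package as the class.

```csharp
using System.Collections.Generic;
using System.IO;
using System.Threading.Tasks;
using Microsoft.WindowsAzure.Storage.Blob;

namespace Nuagecare.AzureBlobStorage
{
    // Inferred from the public surface of BlobMethods; adjust to match your own class.
    public interface IBlobMethods
    {
        CloudBlobContainer CloudBlobContainer { get; set; }

        Task<CloudBlobContainer> SetUpContainer(string containerName);

        Task<byte[]> DownloadToByteArray(string sourceDirectoryName, string fileName);

        Task<byte[]> DownloadToByteArray(CloudBlobContainer container, string sourceDirectoryName, string fileName);

        Task<MemoryStream> DownloadToMemoryStream(CloudBlobContainer container, string blobUri);

        Task<string> CreateSnapshot(string targetFileName);

        Task<CloudBlockBlob> UploadFromByteArray(byte[] uploadBytes, string targetFileName, string blobContentType);

        Task<CloudBlockBlob> UploadFromByteArray(byte[] uploadBytes, string targetFileName, string blobContentType, IDictionary<string, string> MetaData, string cacheControl);

        Task UpdateMetadata(CloudBlockBlob blob, long NcMediaId);

        Task<bool> BlobExists(string sourceFolderName, string fileName);
    }
}
```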

Add the project as a dependency in your [ProjectName].Application project.

Register it in your [ProjectName].Application.[ProjectName]ApplicationModule:

```csharp
Configuration.ReplaceService(typeof(IBlobMethods), () =>
{
    IocManager.IocContainer.Register(
        Component.For<IBlobMethods>()
            .ImplementedBy<BlobMethods>()
            .LifestyleTransient()
    );
});
```

and then inject it and call it from your app service as below:

```csharp
public async Task<string> UploadDocument(IFormFile files, string documentFolder)
{
    var URL = "";
    // https://developer.telerik.com/products/kendo-ui/file-uploads-azure-asp-angular/

    // Connect to Azure
    var tenancyName = await GetTenancyNameFromTenantId();
    var container = await _blobMethods.SetUpContainer(tenancyName);
    _blobMethods.CloudBlobContainer = container;

    if (files.Length > 0)
    {
        // process file
        using (var ms = new MemoryStream())
        {
            var FileName = ContentDispositionHeaderValue.Parse(files.ContentDisposition).FileName.Replace("\"", "");

            // take out the timestamp
            var Timestamp = FindTextBetween(FileName, "[", "]");
            FileName = FileName.Replace("[" + Timestamp + "]", "");
            var FileNameWithoutExtension = Path.GetFileNameWithoutExtension(FileName);
            var Extension = Path.GetExtension(FileName);

            // put the timestamp back in
            FileName = documentFolder + "/" + FileNameWithoutExtension + "[" + GetTimestamp() + "]" + Extension;

            var DisplayString = L("DocumentUploadedBy", _userRepository.FirstOrDefault((long)AbpSession.UserId).FullName, Clock.Now.ToLocalTime().ToString("ddd d MMM yyyy H:mm"));
            var MediaType = files.ContentType;

            await files.CopyToAsync(ms);
            var fileBytes = ms.ToArray();

            // post to BlobStorage
            var metaData = new Dictionary<string, string>();
            metaData.Add("MediaType", MediaType);
            metaData.Add("TenantId", AbpSession.TenantId.ToString());
            metaData.Add("UserId", AbpSession.UserId.ToString());
            metaData.Add("DisplayString", DisplayString);
            metaData.Add("DocumentFolder", documentFolder);

            var blob = await _blobMethods.UploadFromByteArray(fileBytes, FileName, MediaType, metaData, "no-cache");

            // the URL of the uploaded blob is what gets returned to the caller
            URL = blob.Uri.ToString();
        }
    }

    return URL;
}
```

I hope that gives you a head start!

BTW - I'm fairly crap at this coding malarkey so if you can find a better way of doing things, sweet.

I would also advise you to try and abstract away from Zero if at all possible when working with blob storage. For heavy loading and copying from container to container I put a message on an Azure queue and then have a function app triggered by the queue to copy and merge documents when I create a new tenant. That may not be possible in your case but just be careful with CPU and threading when you're using blob storage. It can be a hungry beast.

Cheers

-


Thanks a lot @rucksackdigital , @bobingham :).