Activities of "leonkosak"

I have a somewhat specific update scenario. We need to update a single property of an entity in a database table. The problem is that each record contains a relatively large binary object (varbinary, at least a few megabytes). In the Azure environment, we always get an error when calling _repository.GetById(id), even though only one property of the entity has to be updated. (There is no issue when we read the binary object from the entity and write it to a temp folder so that the client application gets a FileDto object to download it.)

How can we implement such an update scenario? Thank you for any suggestions.

That's all true, but as far as I can see, ABP.IO (and its paid components) is focused on upgradability. Besides that, Volosoft now has a dedicated designer, and ABP.IO-based solutions will have their own theme (and better support for theming). Let's face it: Metronic is a big, messy container of open-source libraries and components with awful documentation and bad support. Each major version (every 1.5-2 years) is a complete rewrite, and after 5 iterations I cannot understand that kind of development by the Keenthemes team. Most of us use dedicated component suites (like DevExtreme) and don't care about PrimeNG and other stuff. Maybe sweetalert and some other "easy components" (alerts, buttons, ...), but personally, I use DevExtreme for all components in my applications. I would prefer that ABP.IO themes had "options" to "visually integrate" with DevExtreme (and other component suites such as Telerik).

We found something interesting.

If a file is sent directly as a base64 string in JSON (from the .Application project), it takes much longer than when we run the same SQL query, create a file in the temp folder with the binary data (returning a FileDto object with a token), and then have the client application call the .downloadTempFile(fileDto) method.

In both situations, the SQL query took the same time, but the response times were very different.

I also tried the described scenario in a different environment, and the time difference was quite big there as well.

Why is sending a base64 string of a file via the .Application API so much slower?

OK, thank you. Does that also explain why insert speeds are so good (compared to reads)?

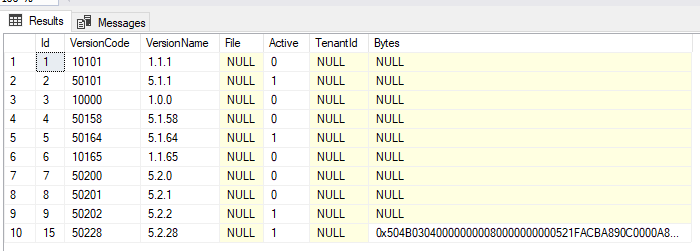

10 rows and only one has binary data.

[HttpGet]

public async Task<FileDto> GetMobileApk(int versionCode = 0)

{

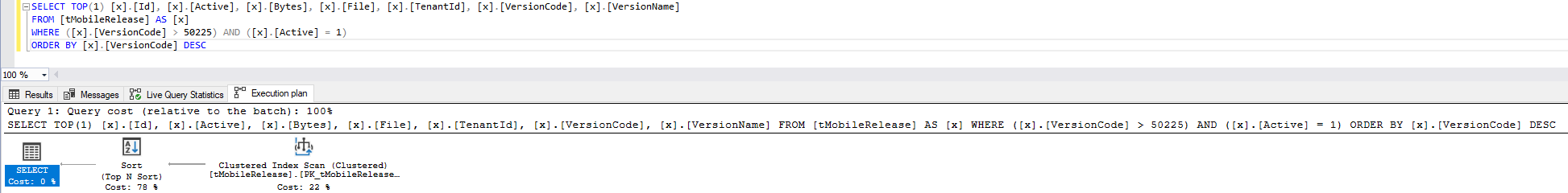

var release = _mobileReleaseRepository.GetAll()

.Where(x => x.VersionCode > versionCode && x.Active)

.OrderByDescending(x => x.VersionCode)

.Select(x => new { x.Id, x.Active, x.File, x.TenantId, x.VersionCode, x.VersionName });

//var id = await release.Select(x => x.Id).FirstOrDefaultAsync();

var lastFile = await release.FirstOrDefaultAsync(); //await _mobileReleaseRepository.GetAsync(id);

var now = DateTime.Now;

var filename = $"Mobile_Tralala.apk";

var file = new FileDto(filename, null);

var filePath = Path.Combine("C:\\Path", file.FileToken);

File.WriteAllBytes(filePath, new byte[9000000]);//File.WriteAllBytes(filePath, lastFile.Bytes);

return file;

}

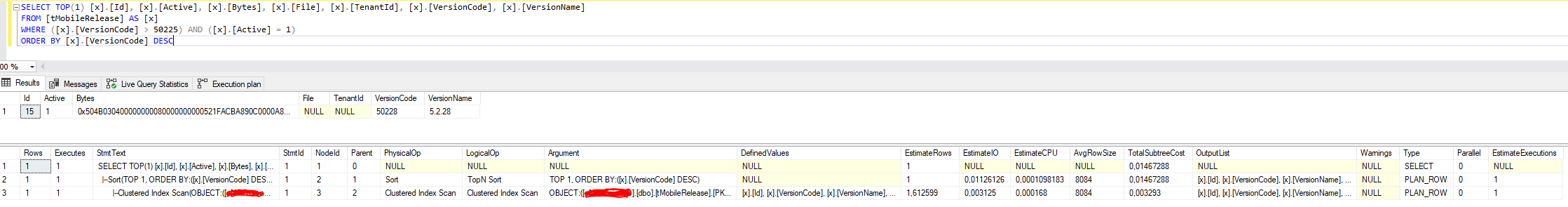

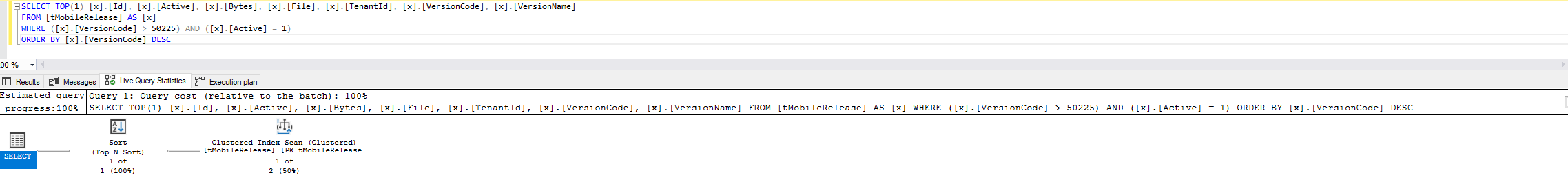

Excluding the binary data (via .Select) greatly improves ExecutionDuration (50-120 ms, and 120-800 ms in Postman), even if an approximately 9 MB array of bytes is written to the file (see the code).

Even if only the Id is selected in the first query and then passed to the GetAsync method, the durations are the same as before.

I found something useful.

    [HttpGet]
    public async Task<FileDto> GetMobileApk(int versionCode = 0)
    {
        // Load the whole entity (including the binary column) of the newest active release.
        var release = _mobileReleaseRepository.GetAll()
            .Where(x => x.VersionCode > versionCode && x.Active)
            .OrderByDescending(x => x.VersionCode);

        var lastFile = await release.FirstOrDefaultAsync();

        var now = DateTime.Now;
        var filename = $"Mobile_Tralala.apk";
        var file = new FileDto(filename, null);
        var filePath = Path.Combine("C:\\Path", file.FileToken);

        // Write the stored bytes to the temp file named after the download token.
        File.WriteAllBytes(filePath, lastFile.Bytes);

        return file;
    }

The funny part is that the ExecutionDuration (AbpAuditLogs table) and the time until the result is displayed in Postman are now almost identical. I can see the generated file, and it is identical to the original, as it should be. The overall execution time (in Postman) is basically a little more than the SQL query time.

Results:

118MB file: Insert: 2.8s Read: not completed in minutes

23MB file: Insert: 1.2s Read: 25s

9MB file: Insert: 270ms Read: 4s

I don't know what happens (inside the API?) that makes returning a base64 string (or bytes) take more than a minute for a 9 MB file, when the execution time in AbpAuditLogs is around 6-7 seconds.

I don't know why reading is so much slower than inserting in SQL.

As we can see, reading the bytes from SQL into a temp file (creating a temp file) is much faster than returning a base64 string or bytes from the same controller (app service). I cannot explain that. Maybe something is disposed on the API side before the client request can finish?
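One way to narrow this down could be to time the two server-side steps in isolation, outside of the ABP pipeline. The following is only a sketch under assumptions: `TimeMs` is a hypothetical helper, the 9,000,000-byte array stands in for the real file, and the temp path is generated locally instead of coming from FileDto.FileToken. If both steps turn out to be fast on the same machine, the extra time is probably spent somewhere else (serialization, auditing, transport), but that would have to be measured.

```csharp
using System;
using System.Diagnostics;
using System.IO;

// Hypothetical helper: runs an action once and returns the elapsed wall-clock time in milliseconds.
static long TimeMs(Action action)
{
    var sw = Stopwatch.StartNew();
    action();
    sw.Stop();
    return sw.ElapsedMilliseconds;
}

// Stand-in for the bytes read from the database (assumed size, not real data).
var payload = new byte[9_000_000];

// Assumed temp location; the real code uses a folder plus FileDto.FileToken.
var tempPath = Path.Combine(Path.GetTempPath(), Guid.NewGuid().ToString("N"));
string encoded = "";

// Step A: write the bytes to a temp file (the FileDto approach).
long writeMs = TimeMs(() => File.WriteAllBytes(tempPath, payload));

// Step B: convert the bytes to base64 text (what a JSON response has to carry).
long encodeMs = TimeMs(() => encoded = Convert.ToBase64String(payload));

long writtenBytes = new FileInfo(tempPath).Length;
File.Delete(tempPath);

Console.WriteLine($"temp file write: {writeMs} ms ({writtenBytes} bytes)");
Console.WriteLine($"base64 encode:   {encodeMs} ms ({encoded.Length} chars)");
```

The timings depend on the machine and disk, so no expected values are given here.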

There is also no difference (execution time, stopwatch time, ...) if there is no conversion (byte[] => base64 string) in AutoMapper. In Postman I can see that we get a base64 representation of the bytes.

The response also takes more than a minute (timed with the stopwatch on my phone) if I call the method from Swagger (ExecutionDuration in AbpAuditLogs is roughly the same as when calling from Postman).
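As a rough illustration of the size overhead when a byte[] ends up as base64 text in a JSON response, here is a minimal C# sketch. The 9,000,000-byte array mirrors the placeholder size used in the test endpoint, and `Base64Length` is a hypothetical helper, not part of ABP. The extra third in payload size is most likely only a small part of the slowdown described here, so this shows one measurable factor, not an explanation.

```csharp
using System;

// Hypothetical helper: length of the base64 text for a payload of byteCount bytes.
// Every group of 3 input bytes becomes 4 output characters; the last group is padded.
static long Base64Length(long byteCount) => 4 * ((byteCount + 2) / 3);

// Same size as the placeholder array in the test endpoint (assumed, not real file data).
var payload = new byte[9_000_000];
string encoded = Convert.ToBase64String(payload);

Console.WriteLine($"raw bytes:     {payload.Length}");
Console.WriteLine($"base64 chars:  {encoded.Length}");
Console.WriteLine($"predicted:     {Base64Length(payload.Length)}");
Console.WriteLine($"size increase: {(double)encoded.Length / payload.Length:P0}");
```

The encoded string is also a second, larger object that lives in memory next to the original byte array, which the temp-file approach avoids.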

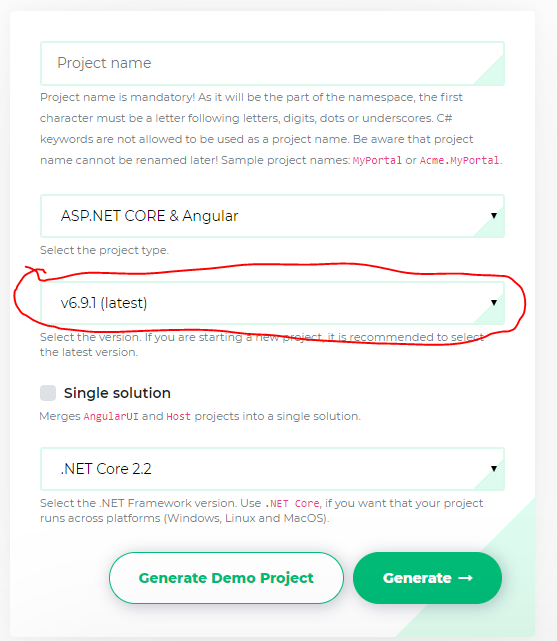

I have warnings there too (also v6.9.1), but no errors. That's strange.