Situation:

There is varbinary column in database table and inserting (uploading file) is really fast (9MB), but when reading only one record, the response is very slow.

There is aprox. 9MB file in varbinary column but it takes 60-70 seconds in our local environment that postman returns response. in AuditLogs, the time is aprox. 6-7 seconds (which is still a lot).

I donn't know why there is so much difference between ecetution time in AuditLogs table and in reality.

I cannot explain either why inserting is so much faster.

AppService

[HttpGet]

public async Task<MobileReleaseListDto> GetMobileRelease(int versionCode = 0)

{

//var release = from mr in _mobileReleaseRepository.GetAll()

// where mr.VersionCode > versionCode && mr.Active

// orderby mr.VersionCode descending

// select mr;

var release = _mobileReleaseRepository.GetAll()

.Where(x => x.VersionCode > versionCode && x.Active)

.OrderByDescending(x => x.VersionCode);

var lastFile = await release.FirstOrDefaultAsync();

if (lastFile != null)

{

return ObjectMapper.Map<MobileReleaseListDto>(lastFile);

}

else

return new MobileReleaseListDto();

}

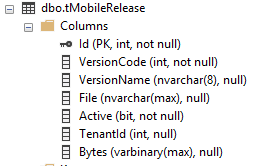

Database table:

[Table("tMobileRelease")]

public class MobileReleaseModel : Entity

{

public const int MaxVersionNameLength = 8;

[Required]

[Key]

[DatabaseGenerated(DatabaseGeneratedOption.Identity)]

public override int Id { get; set; }

public int VersionCode { get; set; }

[StringLength(MaxVersionNameLength)]

public string VersionName { get; set; }

public string File { get; set; }

public bool Active { get; set; }

public int? TenantId { get; set; }

public byte[] Bytes { get; set; }

}

Our local environment is pretty powerful and that is not the problem. In Azure, we cannot get response after 500+ seconds.

Thank you for suggestions.

17 Answer(s)

-

0

Hi Leon,

This sounds like one for investigation in SQL Server. Output the SQL, open SSMS and run the query with "Query" -> "Include Actual Execution Plan". That should give you some clues. You might want to consider putting the varbinary value (9MB - that's not good for SQL) in Azure table storage and using a cross-reference in your SQL table or vice-versa. -

0

We will definitely run SQL profiler, but we suspect that this issue is not SQL related. We saw this problem in two different environments (different customers and development environments). Even 2MB file is a poblem (multiple seconds execution time in AbpAuditLogs).

-

0

@leonkosak

If you can check the query time on the DB (using profiler), I would help us to understand the problem a bit more.

-

0

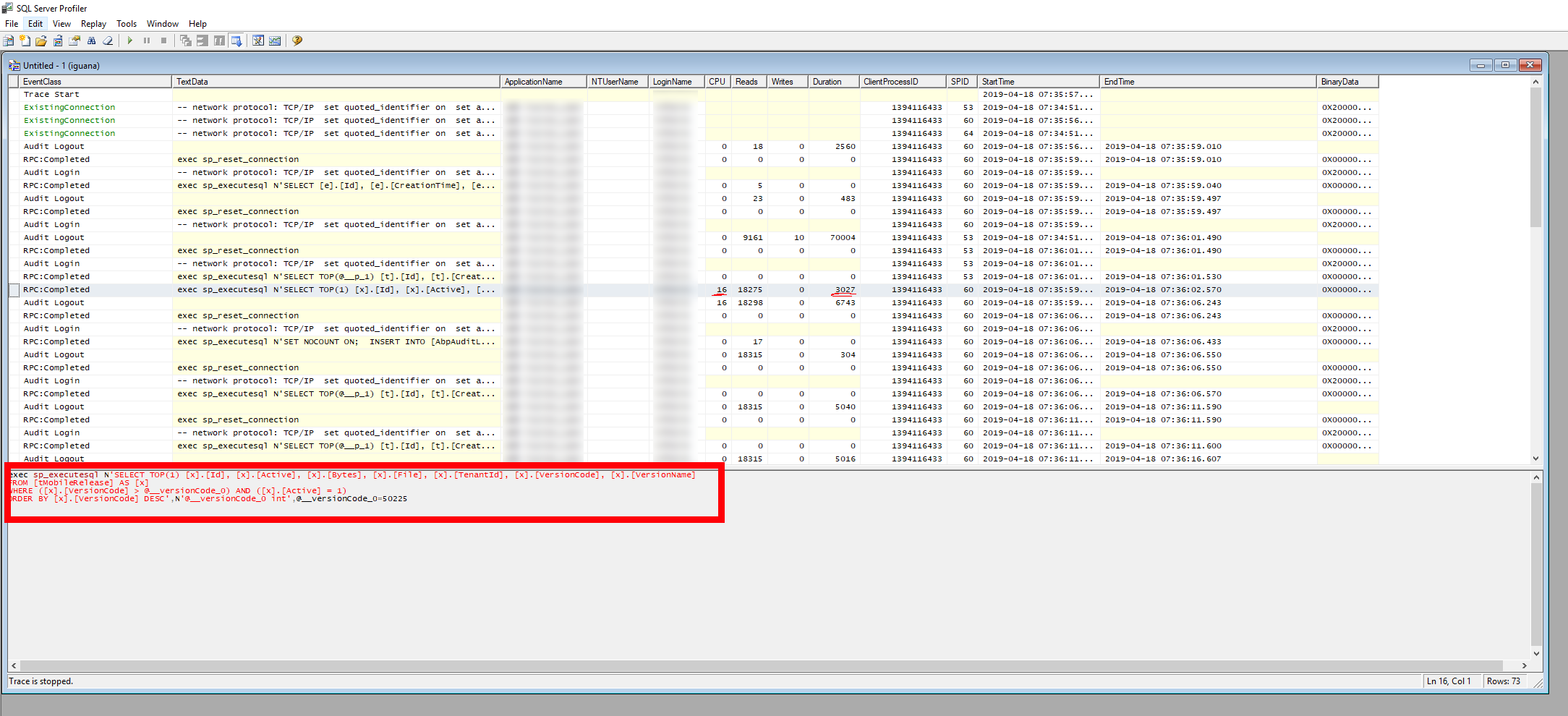

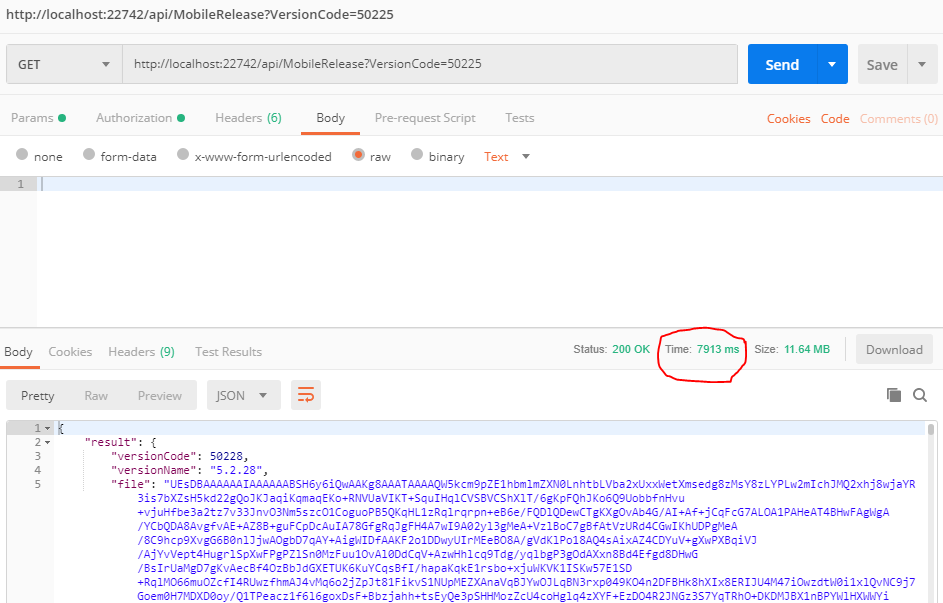

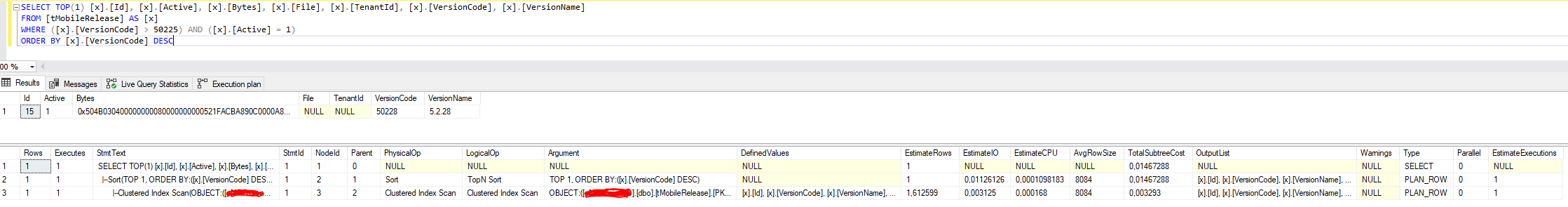

The "Audit Logout" below selected (also CPU: 16, Reads: 18298, Duration: 6743) query is empty.

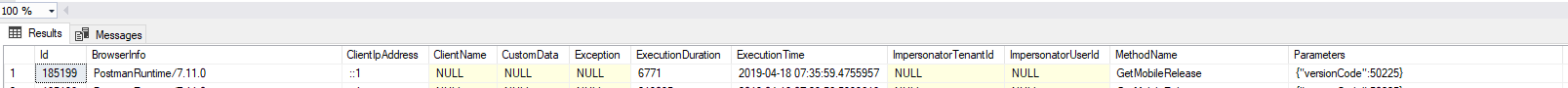

In AbpAuditLogs table

MSSQL 2016 on relatively powerful hardware (virtual machine is basically not loaded with SQL requests at all).

This times and are for aprox 9MB .apk file

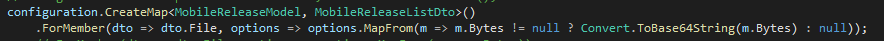

Mapping in AutoMapper (in AppService)

But it takes aprox. 68 seconds that Postman shows result (goes "out" of Loading text).I saw the similar issues storing pdf and image files (jpg, png) with max size 2MB (other customer, SQL 2017 I think, also not loaded virtual machine) - request takes multiple seconds from start to response.

Also simple SQL query, without additional processing (rather than converting received file to byte array) inside API.Thank you for suggestions.

-

0

Response also takes more than a minute (using stopwatch on my phone) if I call method from swagger (ExecutionDuration in AbpAuditLogs is roughly the same as when calling from Postman).

-

0

There is also no difference (execution time, stopwatch time,...) if there is no conversion (byte[] => base64string) in AutoMapper.

In postman I can see that we gat base64 representation of bytes. -

0

@leonkosak

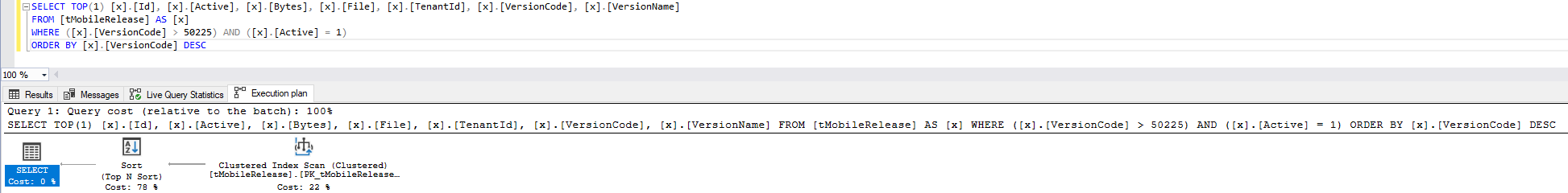

You can follow the steps in this document to generate the results, then share the screenshots? -

0

I don't think the execution plan will tell you anything. I think you will find this is down to how SQL Server stores data internally. NBINARY(MAX) can be stored in-row but it is handled separately by the storage engine because it can be pushed off-row. When off-row it is a LOB_DATA allocation unit, rather than ROW_OVERFLOW_DATA allocation unit and this carries an overhead.

I don't know how much of a show-stopper this is for you because I don't know your system but I would recommend you take the Id column and the Bytes column and put them in Azure's blob storage. You can then call blob storage directly from the client which will remove any API bottleneck. In addition you may want to consider running the query directly on the SQL Server box, this could also have bandwidth issues. -

0

I found something useful.

[HttpGet] public async Task GetMobileApk(int versionCode = 0) { var release = _mobileReleaseRepository.GetAll() .Where(x => x.VersionCode > versionCode && x.Active) .OrderByDescending(x => x.VersionCode); var lastFile = await release.FirstOrDefaultAsync(); var now = DateTime.Now; var filename = $"Mobile_Tralala.apk"; var file = new FileDto(filename, null); var filePath = Path.Combine("C:\\Path", file.FileToken); File.WriteAllBytes(filePath, lastFile.Bytes); return file; }The funny part is that now ExecutionDuration time (AbpAuditLogs table) and the time when result is displayed in Postman are almost identical. I can see generated file and is identical that it has to be.

Overall execution time (in postman) is basically a little more than SQL query time.Results:

118MB file:

Insert: 2.8s

Read: not completed in minutes23MB file:

Insert: 1.2s

Read: 25s9MB file:

Insert: 270ms

Read: 4s

-

I don't know what happens (inside API?) that if base64 string (or bytes) are returned that takes 1 minute + for 9MB file, if execution time in AbpAuditLogs is around 6-7 seconds.

-

I don't know why reading is so much slower than inserting in SQL.

-

As we can see, that reading bytes from SQL to temp file (creating temp file) is much faster than returning base64 string or bytes in the same controller (app service). I cannot explain that? Maybe disposing on API before client request can be finished?

-

-

0

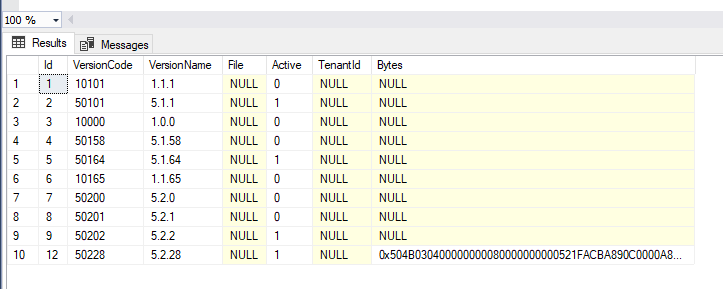

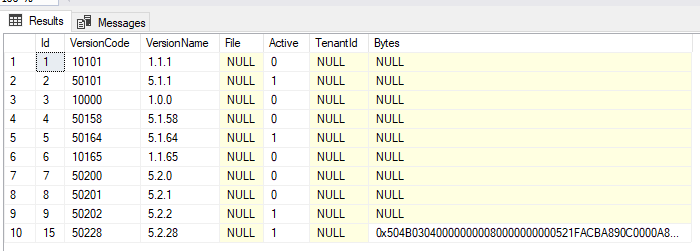

How many rows of data does your table have?

You can try the following:

-

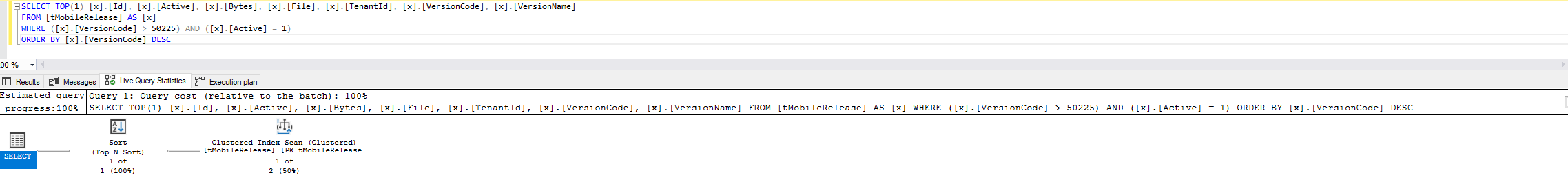

Exclude binary data (Bytes) in the query results, see how fast (select id,... from table where versioncode > 50225 and active = 1).

-

If the above method is very fast, you can use the above method to query the ID, and then query the single data according to the ID, to see how fast. (select bytes from table where id = 1)

-

-

0

10 rows and only one has binary data.

[HttpGet] public async Task GetMobileApk(int versionCode = 0) { var release = _mobileReleaseRepository.GetAll() .Where(x => x.VersionCode > versionCode && x.Active) .OrderByDescending(x => x.VersionCode) .Select(x => new { x.Id, x.Active, x.File, x.TenantId, x.VersionCode, x.VersionName }); //var id = await release.Select(x => x.Id).FirstOrDefaultAsync(); var lastFile = await release.FirstOrDefaultAsync(); //await _mobileReleaseRepository.GetAsync(id); var now = DateTime.Now; var filename = $"Mobile_Tralala.apk"; var file = new FileDto(filename, null); var filePath = Path.Combine("C:\\Path", file.FileToken); File.WriteAllBytes(filePath, new byte[9000000]);//File.WriteAllBytes(filePath, lastFile.Bytes); return file; }-

Excluding binary data (.Select) greatly improves Execution duration (50-120ms and 120-800ms in Postman) even if aprox 9MB array of bytes is added in file (look code below).

-

Even if from first query is only Id selected and then passed in GetAsync method, the duration times are the same as before.

-

-

0

hi

I think the reason for the slow speed may be that sql server reads the binary file from disk and sends it to the application through the data packet.

You can write a console application and then read it using ADO.NET, I believe the speed is as slow.

About sqlserver to read the varbinary type of data solution, you can search on google according to their actual situation.

-

0

Ok. Thank you.

That also explains why insert speed are so well (compared to read)? -

0

You could be suffering from internal or external fragmentation. External where your .mdf data file is stored on your disk in several fragments and internally within your .mdf file your Bytes column could also be fragmented. It seems this could be your problem because your profiler image shows over 18,000 read operations necessary to complete your query. You could consider storing the tMobileRelease table on a seperate filegroup in SQL Server which would give some benefits but my advice would be to remove the Bytes column from SQL Server altogether.

-

0

We found samo interesting.

If a file is sent directly as base64 string in JSON (from .Application project), then it took much more time if we run the same SQL query, then create a file in temp folder with this binary data (and return FileDto object with a token) and then client application call .downloadTempFile(fileDto) method.

In both situations, the SQL query took the same time, but response time was much different.

I also tried described scenario in a different environment and the results (time difference) was also quite big.

Why is sending a base64 string of file via .Application API so much slower?

-

0

There's a performance issue while reading large file as

asyncinEntity Framework Core.

The issue is still open in theEF Corerepo.

So better to usesyncversions of theEF Corefetch methods for large binaries.

See the related issues; -

0

Holy crap!

Even for small binaries, the loading times are not acceptable soon.