Activities of "sedulen"

@ismcagdas ,

To follow up on my post from last month, I have resolved the issue by reviewing & refactoring my AsyncBackgroundJob code.

I was still using a legacy implementation that called .Execute, which wrapped the call to .ExecuteAsync in AsyncHelper.RunSync.

Now my background jobs call .ExecuteAsync directly and Hangfire handles the await implementation natively.

This issue has not occurred since deploying that update to production.
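For anyone comparing the two call paths, here is a minimal sketch of the difference. It is not the actual job code: `ReportJob`, `ReportArgs`, and `Enqueuer` are hypothetical names, the blocking call stands in for what AsyncHelper.RunSync does in the legacy path, and it assumes the Hangfire package is referenced.

```csharp
using System.Threading.Tasks;
using Hangfire;

// Hypothetical args and job types, for illustration only.
public class ReportArgs
{
    public int RequestId { get; set; }
}

public class ReportJob
{
    // Hangfire accepts a Task-returning method directly, so the job
    // needs no sync-over-async wrapper of its own.
    public async Task ExecuteAsync(ReportArgs args)
    {
        await Task.Delay(100); // stand-in for real async work
    }

    // Legacy shape: a synchronous entry point that blocks on the async call.
    // (A stand-in for wrapping ExecuteAsync in AsyncHelper.RunSync.)
    public void Execute(ReportArgs args)
    {
        ExecuteAsync(args).GetAwaiter().GetResult();
    }
}

public static class Enqueuer
{
    public static void EnqueueBoth(ReportArgs args)
    {
        // Blocking path: Hangfire invokes the synchronous wrapper.
        BackgroundJob.Enqueue<ReportJob>(job => job.Execute(args));

        // Async path: Hangfire invokes the Task-returning method itself.
        BackgroundJob.Enqueue<ReportJob>(job => job.ExecuteAsync(args));
    }
}
```

How a given ABP version wires its jobs into Hangfire may differ from this, so treat it as an illustration of the idea only.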

Cheers! -Brian

@mayankm,

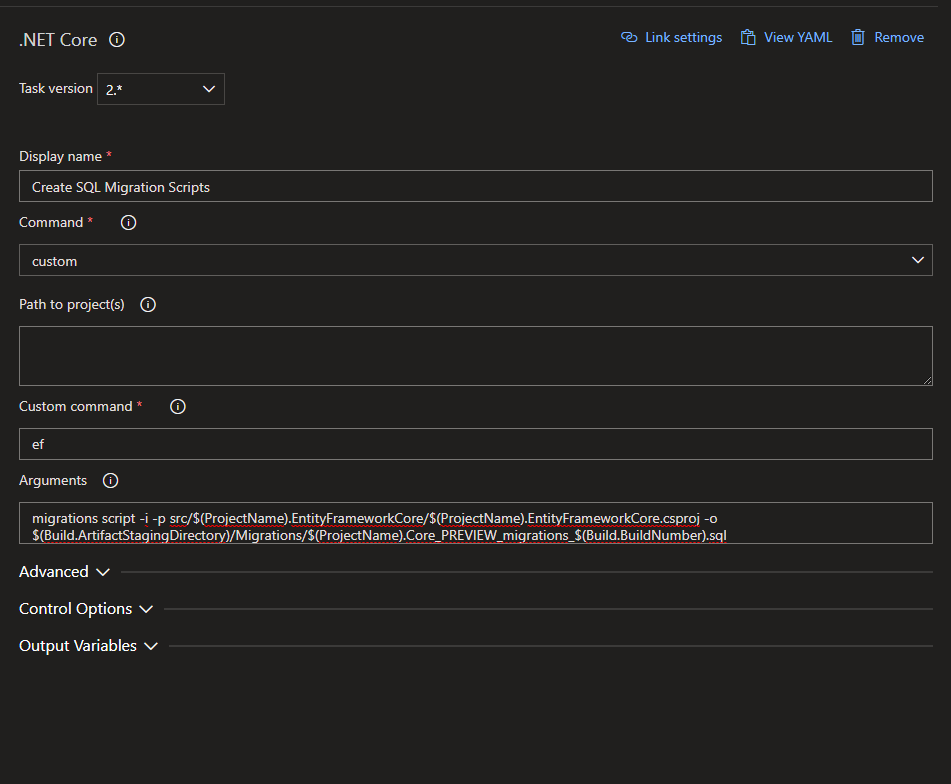

I am able to successfully generate EF migration scripts. However, I am using a different Task in my Azure DevOps pipeline. I am using the .NET Core task, with a command of custom:

- command: custom
- custom command: ef
- arguments: migrations script -i -p src/$(ProjectName).EntityFrameworkCore/$(ProjectName).EntityFrameworkCore.csproj -o $(Build.ArtifactStagingDirectory)/Migrations/$(ProjectName).Core_migrations_$(Build.BuildNumber).sql

I like variables in my pipelines, so I have the ProjectName as a variable.

Once that task completes, I have 2 subsequent Azure DevOps tasks in my pipeline: "Copy SQL files into Sql Artifact" and "Publish Sql Artifact".

"Copy SQL files into Sql Artifact" is a Copy Files task

- source folder: sql
- contents: ******
- target folder: $(Build.ArtifactStagingDirectory)/Migrations/

"Publish Sql Artifact" is a Publish Build Artifacts task

- path to publish: $(Build.ArtifactStagingDirectory)/Migrations/
- artifact name: SQL
- artifact publish location: Azure Pipelines

Let me know if that works for you, -Brian

Hi @dschnitt,

While I'm on an older version of ANZ and not running 10.2, I had looked into doing something like this a year ago for the AbpAuditLogs table, as well as another table that I have defined.

While I never ended up taking this to production, I built a proof-of-concept that used AmbientDataContext.

I had to do a couple of things:

- For the tables that I wanted to move, I had to create concrete repository classes.

- I had to define my own ConnectionStringResolver.

Here is my concrete repository class:

    using Abp.Auditing;
    using Abp.EntityFrameworkCore;
    using Abp.Runtime;
    using Abp.Domain.Repositories;
    using Microsoft.Extensions.Logging;
    using Brian.EntityFrameworkCore.Repositories;
    using Brian.EntityFrameworkCore;

    namespace Brian.MultiTenancy.Auditing
    {
        public class AbpAuditLogsRepository : BrianRepositoryBase<AuditLog, long>, IRepository<AuditLog, long>
        {
            private readonly ILogger<AbpAuditLogsRepository> _logger;

            public AbpAuditLogsRepository(IDbContextProvider<BrianDbContext> dbContextProvider, IAmbientDataContext ambientDataContext, ILogger<AbpAuditLogsRepository> logger)
                : base(dbContextProvider)
            {
                _logger = logger;
                _logger.LogDebug("[AbpAuditLogsRepository] : setting AmbientDataContext 'DBCONTEXT' to 'AuditLog'");
                ambientDataContext.SetData("DBCONTEXT", "AuditLog");
            }
        }
    }

Here is my ConnectionStringResolver:

    using System;
    using Abp.Configuration.Startup;
    using Abp.Domain.Uow;
    using Microsoft.Extensions.Configuration;
    using Brian.Configuration;
    using Abp.Reflection.Extensions;
    using Abp.Zero.EntityFrameworkCore;
    using Abp.MultiTenancy;
    using Abp.Runtime;
    using Microsoft.Extensions.Logging;

    namespace Brian.EntityFrameworkCore
    {
        public class BrianDbConnectionStringResolver : DbPerTenantConnectionStringResolver
        {
            private readonly IConfigurationRoot _appConfiguration;
            private readonly ILogger<BrianDbConnectionStringResolver> _logger;
            private readonly IAmbientDataContext _ambientDataContext;

            public BrianDbConnectionStringResolver(IAbpStartupConfiguration configuration,
                ICurrentUnitOfWorkProvider currentUnitOfWorkProvider,
                ITenantCache tenantCache,
                IAppConfigurationAccessor configurationAccessor,
                IAmbientDataContext ambientDataContext,
                ILogger<BrianDbConnectionStringResolver> logger)
                : base(configuration, currentUnitOfWorkProvider, tenantCache)
            {
                _ambientDataContext = ambientDataContext;
                _appConfiguration = configurationAccessor.Configuration;
                _logger = logger;
            }

            public override string GetNameOrConnectionString(ConnectionStringResolveArgs args)
            {
                var s = base.GetNameOrConnectionString(args);
                object dbContext = null;
                try
                {
                    dbContext = _ambientDataContext.GetData("DBCONTEXT");
                    if(dbContext != null && dbContext.GetType().Equals(typeof(string)))
                    {
                        var context = (string)dbContext;
                        _logger.LogDebug($"[BrianDbConnectionStringResolver.GetNameOrConnectionString] : Found 'DBCONTEXT' of '{context}'");
                        //how do we ensure that there _is_ a connectionString defined for the given Context
                        var connectionString = _appConfiguration.GetConnectionString(context);
                        if(!string.IsNullOrEmpty(connectionString))
                        {
                            _logger.LogDebug($"[BrianDbConnectionStringResolver.GetNameOrConnectionString] : Found connectionString defined for 'DBCONTEXT' of '{context}'");
                            s = connectionString;
                        }
                    }
                }
                catch (Exception ex)
                {
                    //can we log this
                    _logger.LogError(ex, "[BrianDbConnectionStringResolver.GetNameOrConnectionString] : An unexpected error has occurred.");
                }
                return s;
            }
        }
    }

Then in my WebCoreModule, in the PreInitialize method:

    public override void PreInitialize()
    {
        Configuration.ReplaceService<IConnectionStringResolver, BrianDbConnectionStringResolver>();
        Configuration.ReplaceService<IRepository<AuditLog, long>, AbpAuditLogsRepository>();
        ...

Disclaimer: this code was written against a much older version of ABP & ANZ, and written in just a few hours as part of a rapid proof-of-concept just to see if this was even feasible. It's definitely not my cleanest work, and it was never code reviewed or tested for production readiness. Additionally, I never looked into how this would be handled for data migrations within EntityFrameworkCore, so it's possible that doing this could cause issues running the .Migrator project or running your EF migrations, either code-first or via SQL scripts.

I don't know if ABP / ANZ still supports or recommends using DbPerTenantConnectionStringResolver or IAmbientDataContext.

I hope this helps. Good luck! -Brian

Hi @ismcagdas ,

Thank you for the reply. Unfortunately, it's not possible to share the source code.

The explanation of the code is that the args.RequestId is a record in a table. There is a second table that identifies the list of documents to be zip'd up as part of this request. So the BuildZipFileAsync method takes the requestId, gets the list of documents to be zip'd up for this request, and then iterates over the list.

For each document, get the Stream of the document from our storage provider (Azure Blob Storage) and copy the stream into the zipfile as a new entry.

The method also compiles a "readme.txt" that lists all of the documents and some additional metadata about each file.

Ultimately that zipfile is retained as another Stream, which is sent back to our storage provider for persistent storage, and then the id of that new file is what is passed on to the notification so that the user notification can reference the zipfile.

The only additional code that I have in place is for handling the streams. Since these zipfiles could contain hundreds of files, I didn't want to deal with potential memory pressure issues, so I try never to hold onto a file as a MemoryStream. Instead I use FileStreams in a dedicated temporary folder on that node. I have this wrapped in a StreamFactory class. I do associate the streams that are in use with the UnitOfWork, so when that UnitOfWork is disposed, I ensure that those FileStreams are 0'd out if I can, and that they are properly disposed of.
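To make the approach concrete without sharing the real source, here is a minimal sketch of building a zip into a temporary FileStream instead of a MemoryStream. It is not the actual StreamFactory or BuildZipFileAsync code: `ZipSketch`, `BuildZipAsync`, and the `documents` parameter are hypothetical, each delegate stands in for a storage-provider download, and the readme here lists only entry names.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.IO.Compression;
using System.Text;
using System.Threading.Tasks;

public static class ZipSketch
{
    // Builds a zip in a temp file and returns a stream positioned at the start.
    // 'documents' maps an entry name to a function that opens the source stream.
    public static async Task<Stream> BuildZipAsync(
        IEnumerable<KeyValuePair<string, Func<Task<Stream>>>> documents,
        string tempFolder)
    {
        Directory.CreateDirectory(tempFolder);
        var path = Path.Combine(tempFolder, Guid.NewGuid().ToString("N") + ".zip");

        // DeleteOnClose removes the temp file once the stream is disposed.
        var zipStream = new FileStream(
            path, FileMode.CreateNew, FileAccess.ReadWrite, FileShare.None,
            81920, FileOptions.Asynchronous | FileOptions.DeleteOnClose);
        try
        {
            var readme = new StringBuilder();

            // leaveOpen: true keeps zipStream usable after the archive is finalized.
            using (var archive = new ZipArchive(zipStream, ZipArchiveMode.Create, true))
            {
                foreach (var doc in documents)
                {
                    var entry = archive.CreateEntry(doc.Key);
                    using (var source = await doc.Value())
                    using (var target = entry.Open())
                    {
                        // Copy straight through; the document is never fully buffered.
                        await source.CopyToAsync(target);
                    }
                    readme.AppendLine(doc.Key);
                }

                var readmeEntry = archive.CreateEntry("readme.txt");
                using (var writer = new StreamWriter(readmeEntry.Open(), Encoding.UTF8))
                {
                    await writer.WriteAsync(readme.ToString());
                }
            }

            zipStream.Position = 0;
            return zipStream;
        }
        catch
        {
            zipStream.Dispose(); // also deletes the temp file
            throw;
        }
    }
}
```

The caller owns the returned stream and would dispose it after uploading it to persistent storage.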

This StreamFactory code & strategy has been in place for years, and is used extensively throughout my application, so if this were the root cause of my issue, I would expect to be seeing similar issues in other features of my application. So I'm doubtful that this is it, but I also don't want to rule anything out.

I did some testing within my company yesterday with regards to concurrency, where we triggered ~10-15 of these requests all at once, and we did not observe any issues. So far this issue has only appeared in production. I have 2 non-production Azure-hosted environments plus I have my local laptop environment which I can expose to other users through ngrok.io, and we can't reproduce this issue anywhere else, which leads me further towards something environmental.

For what is actually being committed in that await uow.CompleteAsync(); statement, at the RDBMS level, I am inserting 1 new record into 1 table, and updating another record in another table with the ID (FK). (Basically - add a new document, and then tell the "request" what document represents the zipfile that was just generated). So the SQL workload should be extremely lightweight.

Recognizing my older versions, I'm looking at upgrading ABP from v4.10 to v4.21, but in the collective release notes, I'm not seeing anything that would affect this. I'm also looking at upgrading Hangfire from v1.7.27 to v1.7.35, but again I don't see anything that would affect this.

I had been running ABP v4.5 for a very long time, working on a plan to upgrade to v8.x "soon".

Earlier this year, I ran into a SQL connectionPool starvation issue in PROD, and determined that it was caused by using AsyncHelper.RunSync. Increasing the minThreadPool size resolved the immediate issue, and that's where I decided to upgrade from v4.5 to v4.10, as you did great work at that time to reduce the usage of AsyncHelper.RunSync.

I am going to continue to look at the health of our Azure SQL database, to see if we have anything interfering that may cause the UnitOfWork to hang. I am also going to continue to look at ConnectionTimeout, CommandTimeout, and TransactionTimeout settings, as well as my Hangfire configuration. Honestly, I don't mind if the zipfile creation fails. I can implement retry logic here for resilience. What bothers me is that the Transaction seems to hang indefinitely, where Hangfire thinks the running job has been orphaned and queues another instance of the same job, and this hanging job locks/blocks other instances of the same job.
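On the retry point, Hangfire ships an `AutomaticRetry` filter attribute, so a bounded retry could be sketched roughly as below. `ZipJobSketch`, `BuildAsync`, and `ZipJobEnqueuer` are hypothetical names, the `long` request id and the attempt count are arbitrary examples, and this assumes the job is enqueued through Hangfire directly rather than through ABP's job manager.

```csharp
using System.Threading.Tasks;
using Hangfire;

// Hypothetical job class, for illustration only.
public class ZipJobSketch
{
    // Retry up to 3 times if the method throws, then mark the job as failed.
    [AutomaticRetry(Attempts = 3, OnAttemptsExceeded = AttemptsExceededAction.Fail)]
    public async Task BuildAsync(long requestId)
    {
        // Stand-in for the real zip-building work.
        await Task.Delay(100);
    }
}

public static class ZipJobEnqueuer
{
    public static string Enqueue(long requestId)
    {
        // Returns the Hangfire job id.
        return BackgroundJob.Enqueue<ZipJobSketch>(job => job.BuildAsync(requestId));
    }
}
```

This only helps when the job actually throws. It does nothing for a job that hangs without failing, which is the harder problem here.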

Thanks again for the reply. Let me know if anything else comes to mind.

-Brian

Hi @rickfrankel ,

I realize that I'm very late in responding to this topic, but I was curious if your solution using the ABP RedisOnlineClientStore was working for you.

While I was working on the initial AzureTablesOnlineClientStore implementation, I had also written my own RedisOnlineClientStore implementation but ran into challenges with Network I/O and concurrent request load in my Azure Redis cache, which is why I decided to move towards Azure Tables instead. Have you found any network I/O or load issues connecting to your Redis cache using the RedisOnlineClientStore ?

I do recognize that in my AzureTablesOnlineClientStore implementation, I did not add a timestamp or any mechanism to clean out the table for old or stale connections.

If the ABP RedisOnlineClientStore implementation works for you, that's awesome. I'll have to look into switching to that store instead.

Cheers and Happy Coding! -Brian

Good afternoon,

I'm on an older version of ABP (v4.10), and while I am working on upgrading to a newer version, I'm running into a new and rather challenging issue.

I have been using Hangfire for my background jobs for years now, and only very recently, I have 1 job in particular that seems to be getting stuck.

The background job is extremely simple. It takes a list of files that a user has selected, packages them up into a zipfile, and then sends a user a link to download the zipfile and sends the user who submitted the request a notification that the zipfile was sent.

In an effort to troubleshoot the issue and ensure I don't send emails before the zipfile has been constructed, I am explicitly beginning and completing each UoW using UnitOfWorkManager.

Here is my code (logging removed for readability):

    public class BuildZipfileBackgroundJob : AsyncBackgroundJob<BuildZipfileArgs>, ITransientDependency
    {
        // ... properties & constructor removed to condense the code...

        protected override async Task ExecuteAsync(BuildZipfileArgs args)
        {
            if (args.CreateNewPackage)
            {
                using (var uow = _unitOfWorkManager.Begin())
                {
                    using (_unitOfWorkManager.Current.SetTenantId(args.TenantId))
                    {
                        using (AbpSession.Use(args.TenantId, null))
                        {
                            await _zipFileManager.BuildZipFileAsync(args.RequestId);
                            await uow.CompleteAsync();
                        }
                    }
                }
            }

            if (args.Send)
            {
                using (var uow = _unitOfWorkManager.Begin())
                {
                    using (_unitOfWorkManager.Current.SetTenantId(args.TenantId))
                    {
                        using (AbpSession.Use(args.TenantId, null))
                        {
                            await _zipFileManager.SendZipFileAsync(args.RequestId);
                            await uow.CompleteAsync();
                        }
                    }
                }
            }
        }
    }

As I've been trying to troubleshoot what is happening, I have added Logger statements, and what I am seeing is that the first await uow.CompleteAsync() is never completing. My logging stops and I never see any more logging after that for this job.

Additionally, if other users queue up more requests for this background job, the issue continues to grow, and more Hangfire jobs become clogged.

I have read some about the Configuration.UnitOfWork.Timeout setting, and right now I'm not changing that value in my startup configuration.

What I'm seeing is that the job hangs for hours, so Hangfire thinks the job is an orphaned job and queues another instance of the same job, which further compounds the issue. Ultimately, Hangfire queues up the same job ~5-6x, which then causes problems with Hangfire polling its JobQueue table, and that breaks the entire Hangfire queue processing, leaving jobs enqueued but never processing.

What I'm struggling with is why await uow.CompleteAsync() is getting stuck and never completing. It seems like there is a transaction lock or deadlock that could be causing the problem, but I've been really struggling to figure out the root cause (and resolution).
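One way to at least turn a silent hang into a visible failure while investigating is to stop waiting after a deadline. The helper below is a generic sketch, not part of ABP or Hangfire; `TaskTimeout` and `WithTimeout` are hypothetical names. It does not cancel the underlying operation, so any lock the hung call holds would still be held.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class TaskTimeout
{
    // Awaits 'work', but throws TimeoutException if it has not finished within 'timeout'.
    // This stops waiting; it does not cancel the underlying operation.
    public static async Task WithTimeout(Task work, TimeSpan timeout, string label)
    {
        using (var cts = new CancellationTokenSource())
        {
            var delay = Task.Delay(timeout, cts.Token);
            var finished = await Task.WhenAny(work, delay);
            if (finished != work)
            {
                throw new TimeoutException($"{label} did not complete within {timeout}.");
            }

            cts.Cancel(); // release the timer behind Task.Delay
            await work;   // propagate any exception from the original task
        }
    }
}
```

A call site might look like `await TaskTimeout.WithTimeout(uow.CompleteAsync(), TimeSpan.FromMinutes(2), "uow.CompleteAsync");`. Disposing the unit of work afterwards could itself block if the connection is stuck, so this is a diagnostic aid rather than a fix.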

Given my version of ABP (v4.10.0), I'm thinking perhaps it's the use of AsyncHelper. Since my class inherits from AsyncBackgroundJob, the job execution is:

    public override void Execute(TArgs args)
    {
        AsyncHelper.RunSync(() => ExecuteAsync(args));
    }

I don't think I have a way around this, and I have many other background jobs that inherit from AsyncBackgroundJob that do not have this problem.

I've thought about using UnitOfWorkOptions when calling .Begin(), and adjusting the Timeout and the Scope attributes, but I feel like I'm just swatting blindly.
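For reference, passing options to .Begin() would look roughly like the sketch below. `UowOptionsSketch` and `RunAsync` are hypothetical names and the values are illustrative, not recommendations. Whether the Timeout is actually honored may depend on the transaction strategy in use, which is worth verifying before relying on it.

```csharp
using System;
using System.Threading.Tasks;
using System.Transactions;
using Abp.Domain.Uow;

// Hypothetical wrapper class, for illustration only.
public class UowOptionsSketch
{
    private readonly IUnitOfWorkManager _unitOfWorkManager;

    public UowOptionsSketch(IUnitOfWorkManager unitOfWorkManager)
    {
        _unitOfWorkManager = unitOfWorkManager;
    }

    public async Task RunAsync(Func<Task> work)
    {
        var options = new UnitOfWorkOptions
        {
            // Start a fresh transaction instead of joining an ambient one.
            Scope = TransactionScopeOption.RequiresNew,
            // Illustrative value only.
            Timeout = TimeSpan.FromMinutes(2)
        };

        using (var uow = _unitOfWorkManager.Begin(options))
        {
            await work();
            await uow.CompleteAsync();
        }
    }
}
```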

My environment is deployed in Azure, using Azure Storage for files and Azure SQL for the RDBMS.

Any ideas or suggestions you can offer would be greatly appreciated! Thanks, -Brian

Good morning everyone,

A new issue has come up recently in my production environment that I'm struggling to understand. I have a LOT of Hangfire background jobs, which have been running very smoothly for a very long time.

In the last ~5-6 days, I have been experiencing an issue where 1 specific background job hangs and never completes.

This background job builds a zip file, stores it in blob storage, sends an email to the intended recipient, and then publishes an ABP notification to the internal user who initiated the job.

I see the zip file being created and I see the email being sent.

However, in the Hangfire dashboard, the background job never completes.

When I look at my application logs, it looks like my code reaches the call to INotificationPublisher.PublishAsync, but never continues.

I am using Azure SQL for my RDBMS, and when I look at my SQL DB, and I look at the transaction locks on the AbpNotifications table, I see that a table lock exists:

    SELECT * FROM sys.dm_tran_locks
    WHERE resource_database_id = DB_ID()
    AND resource_associated_entity_id = OBJECT_ID(N'dbo.AbpNotifications');

I'm not seeing any evidence of deadlocks in my Azure SQL database, so it's unclear to me what would be causing this to hang.

I'm also finding that this seems to be somewhat intermittent & inconsistent, where some instances of this job complete just fine, while others hang like this and never complete.

This seems to be specific to my Azure SQL database, and I will continue to investigate, but I was curious if anyone else has encountered this kind of behavior.

Thanks! -Brian

Thank you very much @ismcagdas

I will give this a try and let you know how it goes. -Brian

Hello! It's been a very long time since I've posted (or answered any question). Apologies for that.

I did have a question that I was hoping someone could help me with. I have a feature in my application UI to "open in a new window" for a document viewer. The new window that opens doesn't need the header / footer / notifications / signalr / or any of the platform UI wireframe. It truly just needs to be this 1 component.

I feel like I should know how to do this, but I'm just drawing a blank.

My angular chops aren't where they should be I guess.

Has anyone else had this need and had a solution?

There are definitely some pieces that I'll need like Features, Permissions, Localized Text, etc...

My "Stand Alone" component still inherits from AppComponentBase, and right now I'm just using CSS to hide the header and footer and to maximize the body of the page to the full window.

In my browser's Network traffic, I still see a LOT of communications that I don't need, like websockets (signalr), Profile Picture, Notifications, Linked Users, etc.

Any recommendations on how to cut that out of the page load would be incredibly helpful.

Thanks! -Brian

I'm sorry @ismcagdas. I misunderstood you.

I was working with a brand new AspNet Zero project. Yes - I will try creating a brand new empty Asp.Net Core Web project, add the Swashbuckle libraries to it and then see if I can get their Cli to work with that example.

I will follow up and let you know how it goes. -Brian