Prerequisites

Please answer the following questions before submitting an issue. YOU MAY DELETE THE PREREQUISITES SECTION.

- What is your product version? v9.3.0, upgrading from v6.9.1

- What is your product type (Angular or MVC)? Angular

- What is product framework type (.net framework or .net core)? .net core

If issue related with ABP Framework

- What is ABP Framework version? v5.14.0

I have a product that has been working on a dotnet core upgrade (2.2 to 3.1) since April 2021 (it's taken so long due to other feature development work, and not due to ANZ) Since August 2021, we have been running our /develop build of our product on ANZ v9.3.0 in our dev environment and it's ran great. In December 2021, we promoted our /develop build to our UAT environment, and again it ran great. Last weekend, we tried promoting to our PROD environment, and the system completely tanked.

We deploy on Azure AppServices, and we use TerraForm to govern all platform infrastructure configuration, so we know UAT and PROD were configured the same. We also deploy using Docker containers, so we know the code was the same. The only identfiable difference would be data, and we are looking at possible anomalies in PROD data that could have triggered this.

Our resource monitoring in Azure shows healthy performance for memory & cpu resources in our Azure AppServices, as well as CPU and Data I/O in our SQL resources. On our Azure AppService resource monitoring, we did not see hig request queuing or any other measurable metric available in Azure's metrics that could have identified a bottleneck or problem.

The issue we observed is extreme latency. For example the "AbpUserConfiguration/GetAll" endpoint took minutes to execute. Comparing to the current version of our product, it completes sub 200ms in PROD. The extreme latency seemed to affect all API endpoint requests.

As I didn't see any performance issues on our SQL server, I thought perhaps it was a caching issue in SettingsManager, but I confirmed that we were not configured to run Redis or any other distributed cache, so all caching would have been held in memory.

My other thought was could it have been a bottleneck somewhere in the request pipeline, perhaps in validating the JWT Token, but we observed the same latency issues hitting anonymous endpoints from Postman or other browsers in incognito mode, where no Authentication header would have been present in the request.

Lastly, I scanned our code to confirm that we aren't doing anything with sync locks or any other type of optimistic locking.

I realize that this is a broad post, and the ASNZ team isn't responsible for my Azure infrastructure.

I'm curious if anyone else has experienced anything like this? and if so, what your experiences were and possibly what other things we should examine or troubleshoot.

Thanks to the ASNZ community!

-Brian

26 Answer(s)

-

0

Hi @sedulen

Some of our customers faced similar problems but with different reasons.

- Do you use Redis in your app ?

- Could you share your Abp Nuget packages ?

- How many users are actively using the system ?

I can suggest you a solution depending on your answers.

Thanks,

-

0

Good morning @ismcagdas Thank you for the response.

1. Do you use Redis in your app ? We do support Redis, but when we encountered this issue, we did not have Redis configured.

In our

Startup.csclass, in theConfigureServicesmethod, we have added:bool.TryParse(_appConfiguration["Abp:RedisCache:Enabled"], out bool useRedis); var redisConnectionString = string.Empty; if (useRedis) { //get the Redis connectionString redisConnectionString = _appConfiguration.GetConnectionString(_appConfiguration["Abp:RedisCache:ConnectionString"]); if (String.IsNullOrEmpty(redisConnectionString)) { redisConnectionString = _appConfiguration["Abp:RedisCache:ConnectionString"]; } }This governs our Redis configuration at runtime, and I have confirmed that we have Abp__RedisCache__Enabled: false in our ENV Vars when we start our docker container.

2. Could you share your Abp Nuget packages ? Abp (5.14.0) Abp.EntityFrameworkCore.EFPlus (5.14.0) Abp.Web.Common (5.14.0) Abp.AutoMapper (5.14.0) Abp.Mailkit (5.14.0) Abp.Zero.Ldap (5.14.0) Abp.ZeroCore.IdentityServer4.EntityFrameworkCore (5.14.0) Abp.AspNetZeroCore (2.2.0) Abp.Zero.Common (5.14.0) Abp.Castle.Log4Net (5.14.0) Abp.AspNetCore (5.14.0) Abp.AspNetCore.SignalR (5.14.0) Abp.AspNetZeroCore.Web (2.2.0) Abp.Castle.Log4Net (5.14.0) Abp.Hangfire.AspNetCore (5.14.0) Abp.RedisCache (5.14.0) Abp.RedisCache.ProtoBuf (5.14.0) Abp.ZeroCore.IdentityServer4 (5.14.0) Abp.TestBase (5.14.0)

3. How many users are actively using the system ? We had observed this issue with ~30-40 concurrent active users in the system, spread across ~4 different tenants.

Thank you very much for the support. -Brian

-

0

@ismcagdas

As a follow-up question, what would the risks be with upgrading NuGet packages to v7.0.1 so that we are running the latest? if we do this, do we also need to upgrade our abp Angular packages as well, or can these be upgraded independently?

Thank you again, -Brian

Update : scratch that idea - while the Abp 7.0.1 packages don't have a direct dependency on .net6, almost all of the packages have a Transient dependency on another package that requires .net6.

-

0

good morning @ismcagdas.

I hope you had a wonderful weekend.

I wanted to reach out and see if you had any thoughts on my previous post.Thanks! -Brian

-

0

Hi @sedulen

Thanks :).

Upgrading to ABP 7.x doesn't force you to upgrade Angular packages, so you can just upgrade Abp pacakges. But, if you upgrade to Abp 7.x, you need to target .NET 6 because of

Abp.AspNetCorepackage, see https://github.com/aspnetboilerplate/aspnetboilerplate/blob/dev/src/Abp.AspNetCore/Abp.AspNetCore.csproj#L7 -

0

Thanks @ismcagdas,

Any thoughts on the original performance issue that was reported? I know you were looking at Redis.

Anything else you can think of to examine?-Brian

-

0

Hi @ismcagdas,

Just to give you an update, I am reviewing this github issue reported against EF Core 3. https://github.com/dotnet/efcore/issues/18022

We're looking to run some jMeter tests against some of our heavier endpoints to see if we can reproduce the same behavior in a test environment.

We have another "GetVersion" endpoint that is anonymously available, and that endpoint also saw significant latency.

So I'm wondering if we had a cascading effort. Slow SQL queries caused table locks. Table locks caused request queueing across multiple requests in the webserver. Ultimately resulting in very lightweight endpoints that don't even hit the database still performing very slowly.

I'll keep you posted as we investigate. -Brian

-

0

Hi @ismcagdas,

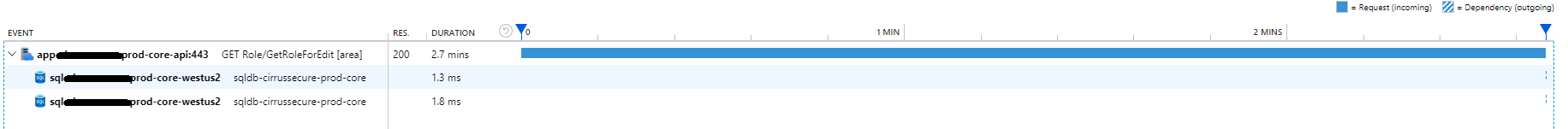

Digging deeper into our Azure telemetry from last week's performance issue, I'm also looking at this:

the code for that AppService method is simply: ` [AbpAuthorize(AppPermissions.Pages_Administration_Roles_Write)] public async Task

if (input.Id.HasValue) //Editing existing role? { var role = await _roleManager.GetRoleByIdAsync(input.Id.Value); grantedPermissions = (await _roleManager.GetGrantedPermissionsAsync(role)).ToArray(); roleEditDto = ObjectMapper.Map<RoleEditDto>(role); } else { roleEditDto = new RoleEditDto(); } return new GetRoleForEditOutput { Role = roleEditDto, Permissions = ObjectMapper.Map<List<FlatPermissionDto>>(permissions).OrderBy(p => p.DisplayName).ToList(), GrantedPermissionNames = grantedPermissions.Select(p => p.Name).ToList() }; }`

and I have confirmed that code matches the default ANZ v9.3.0 code for the RoleAppService

that telemtry chart does give me enough inside into the actual request processing, so I'm downloading the raw logs from our log aggregator.

If I'm reading this correctly, the AppService method took 2.7 minutes to execute, the 2 SQL dependencies occur at the very end, with the second dependency being a "Commit" statement. So if the timing of this dependency graph is correct, either:

- it took the webserver 2.7 minutes to receive the request and hand it over to the instance of the AppService, either due to request queuing in the webserver, or something in our code pipeline / handlers OR

- that very first action of

var permissions = PermissionManager.GetAllPermissions();took 2.7 minutes to execute, which could possibly point back to a caching issue.

-

0

Keeping my progress posted here, I have confidently ruled out #2 above. Digging into the raw logs from our syslogger (shameless plug for PaperTrail I LOVE PaperTrail as a syslogger / aggregator. I use it on ALL of my projects, and it's trivial to wire up into ANZ & Log4Net (as well as SeriLog and other logger frameworks)

2022-01-31 15:39:22 Microsoft.AspNetCore.Hosting.Diagnostics - Request starting HTTP/1.1 GET http://app-<scrubbed>-prod-core-api.azurewebsites.net:443/api/services/app/Role/GetRoleForEdit?Id=52 2022-01-31 15:39:22 ft.AspNetCore.Routing.EndpointMiddleware - Executing endpoint '<scrubbed>.Authorization.Roles.RoleAppService.GetRoleForEdit (<scrubbed>.Application)' 2022-01-31 15:39:22 c.Infrastructure.ControllerActionInvoker - Route matched with {area = "app", action = "GetRoleForEdit", controller = "Role"}. Executing controller action with signature System.Threading.Tasks.Task`1[<scrubbed>.Authorization.Roles.Dto.GetRoleForEditOutput] GetRoleForEdit(Abp.Application.Services.Dto.NullableIdDto) on controller <scrubbed>.Authorization.Roles.RoleAppService (<scrubbed>.Application). 2022-01-31 15:42:02 c.Infrastructure.ControllerActionInvoker - Executing action method <scrubbed>.Authorization.Roles.RoleAppService.GetRoleForEdit (<scrubbed>.Application) - Validation state: Valid 2022-01-31 15:42:02 c.Infrastructure.ControllerActionInvoker - Executed action method <scrubbed>.Authorization.Roles.RoleAppService.GetRoleForEdit (<scrubbed>.Application), returned result Microsoft.AspNetCore.Mvc.ObjectResult in 254.0347ms. 2022-01-31 15:42:02 c.Infrastructure.ControllerActionInvoker - Executed action <scrubbed>.Authorization.Roles.RoleAppService.GetRoleForEdit (<scrubbed>.Application) in 160625.7653ms 2022-01-31 15:42:02 ft.AspNetCore.Routing.EndpointMiddleware - Executed endpoint '<scrubbed>.Authorization.Roles.RoleAppService.GetRoleForEdit (<scrubbed>.Application)' 2022-01-31 15:42:02 Microsoft.AspNetCore.Hosting.Diagnostics - Request finished in 160639.7103ms 200 application/json; charset=utf-8So from "Route matched" to "Executing action method", we see a 2 minute & 40 second lag. Once the action method is executed, it completes in 254ms, which is the code block above in

GetRoleForEdit.This also matches what is observed in the Azure Application Insights Telemtry dependency chart.

Unfortunately this leads me to Thread Starvation & ThreadPool Configuration.

Still working on determining root cause, but I'm getting somewhere.

-Brian

-

0

Hi @sedulen

- Did you upgrade to Abp NuGet packages to 7.x or at least 6.6.2 ?

- If you are using Redis, could you try integrating Per Requet Redis Cache, see https://aspnetboilerplate.com/Pages/Documents/PerRequestRedisCache

-

0

Thanks @ismcagdas,

I have not yet upgraded our Abp NuGet packages. That's on my list of things to do this week.

Regarding Redis, this issue occurs when we are running InMemory Caching, so Redis isn't a factor yet For our RedisCacheManager, we have a custom implementation that leverages a combination of InMemory Caching and Redis. I have not looked at your PerRequestRedisCache implementation yet. But I will take a look.

At the moment, I'm looking at "hot code paths", especially AuthorizationFilters or any other places where method execution is extremely high, to make sure it is performant.

I have a "Firewall" feature that lets tenant admins restrict access by IP ranges, which is implemented as an AsyncAuthorizationFilter. I use a ConcurrentDictionary to cache the firewall rules in-memory, but I believe I found a case where the ConcurrentDictionary is not locking correctly, resulting in many unwanted databases queries. I'm refactoring that to use Lazy, implementing as a LazyConcurrentDictionary (happy to share the code).

Lastly, I see 1 other code path I am revisiting. I have a custom FeatureChecker class, and from ABP v4.5.0 to v5.14.0, sync methods were added to the IFeatureChecker interface. It looks like our implementation of the sync methods may have been a little rushed, and there may be a threading-related bug in that code. That being said, I am curious that for the IFeatureChecker method, why sync methods were added to the interface. When I debug the IFeatureChecker.IsEnabled (sync) method, I see that called a number of times, instead of the IsEnabledAsync method. Any thoughts on that?

Thanks again for the help! -Brian

-

0

Hi,

We have made some enhancements on the Framework to remove usage of AsyncHelper class which causes performance problems on high load. Because of that, we also removed usage of Interception by defualt for Controllers and AppServices.

But, we haven't faced any specific problem on FeatureChecker. The problem we had was related to Redis, because some items like Permissions, Settings and Features are storedin cache. When you use Redis, those items are stored in Redis and in each HTTP request, the app is making many requests to Redis and this was causing a huge performance problem.

We recently made a new enhancement, you can also check it out, https://github.com/aspnetzero/aspnet-zero-core/issues/4141

-

0

Thanks @ismcagdas,

I've already leveraged the enhancement that you cited. We were talking with @ricavir on this thread: https://support.aspnetzero.com/QA/Questions/10772/Token-validation-very-long

When you say: "Because of that, we also removed usage of Interception by defualt for Controllers and AppServices."

Can you give me some more information around this? Is this in the latest ANZ / ABP version or is this something you are working on developing now?

I've been trying to us Ben.BlockingDetector https://github.com/benaadams/Ben.BlockingDetector https://www.nuget.org/packages/Ben.BlockingDetector

When I turn it on it seems to be extremely noise, and complains about a LOT of potential blocking issues. One issue it seems to identfy is NewtonSoft.Json's serialization not supporting

async, and a result it buffers serialization to the filestream.I'm deploying to Azure AppServices using Linux Containers, and I think there is a metrics limitation inside of AppServices for Linux where I cannot see the ThreadPool behavior. So I'm really struggling to see if I am suffering from ThreadPool Starvation.

When you get a chance, can you give me more information around what you've done with "Interception" ?

Thanks, -Brian

-

0

Hi @ismcagdas,

I have continued to try and troubleshoot this issue. I have rebuilt my docker image locally to include several dotnet tools. I have also wired up my docker image to report to Azure ApplicationInsights and to their Profiler. I have produced several dump files and net traces, and oddly what PerView and Visual Studio Parallel Stacks seem to indicate is that my bottlenecks are at synchronous function calls that are being executed within async function calls. This seems like it would make sense because calling those synchronous functions inside of async function calls would cause the Threads to wait. (am I understanding that correctly?)

What's most surprising to me is that the call stacks in the PerView that indicate synchronous calls are in:

- Microsoft.Extensions.Logging.Console

- Microsoft.ApplicationInsights

- System.IO.FileSystem.Watcher

I'm surprised to see that the Console logging is as bad as it is. I'm not surprised to see that ApplicationInsights and the Profiler could be impacting performance

For the FileSystem Watcher behavior, I have read of issues with NewtonSoft.JSON not supporting async serialization, and using a FileSystem Buffer to help with peformance. Any thoughts on moving from NewtonSoft.JSON to System.Text.Json for API serializaion & deseralization?

Lastly, I do see a LOT of time spent in Castle.Windsor, in their ProxyGenerator.CreateInterfaceProxyWithTarget method, which uses a

SynchronizedDictionaryto Get or Add the class that is being returned.Ultimately what I am seeing is a very shallow function taking over 11 seconds. The AppService function simply checks a setting, that should already be cached, for if a threshold policy is set, and returns false, because it's not). To note, the AppService that services this function has 24 constructor injected dependencies, some of which may have deeper dependencies.

Is it possible that the Castle Windows is impacting performance here due to the number of injected dependencies in this class? Could I be encountering what was discussed here: https://github.com/aspnetboilerplate/aspnetboilerplate/issues/5604

I'm also curious if you have any recommended production configuration settings for Logging and for monitoring services like ApplicationInsights.

Thanks for the continued help! -Brian

-

0

Hi @ismcagdas,

If you have time, I'm interested to know what the team did with "we also removed usage of Interception by default for Controllers and AppServices".

Thanks, -Brian

-

0

Hi,

You find the correct PR actually. We removed this with https://github.com/aspnetboilerplate/aspnetboilerplate/pull/6165 in ABP 6.4.

Also this PR in AspNet Zero repository implements async token validation which also helps the performance.

Using both changes in your project will help to increase the performance.

-

0

thanks @ismcagdas.

i will look at PR 6165 in a few minutes. ive already backported the async token validation implementation

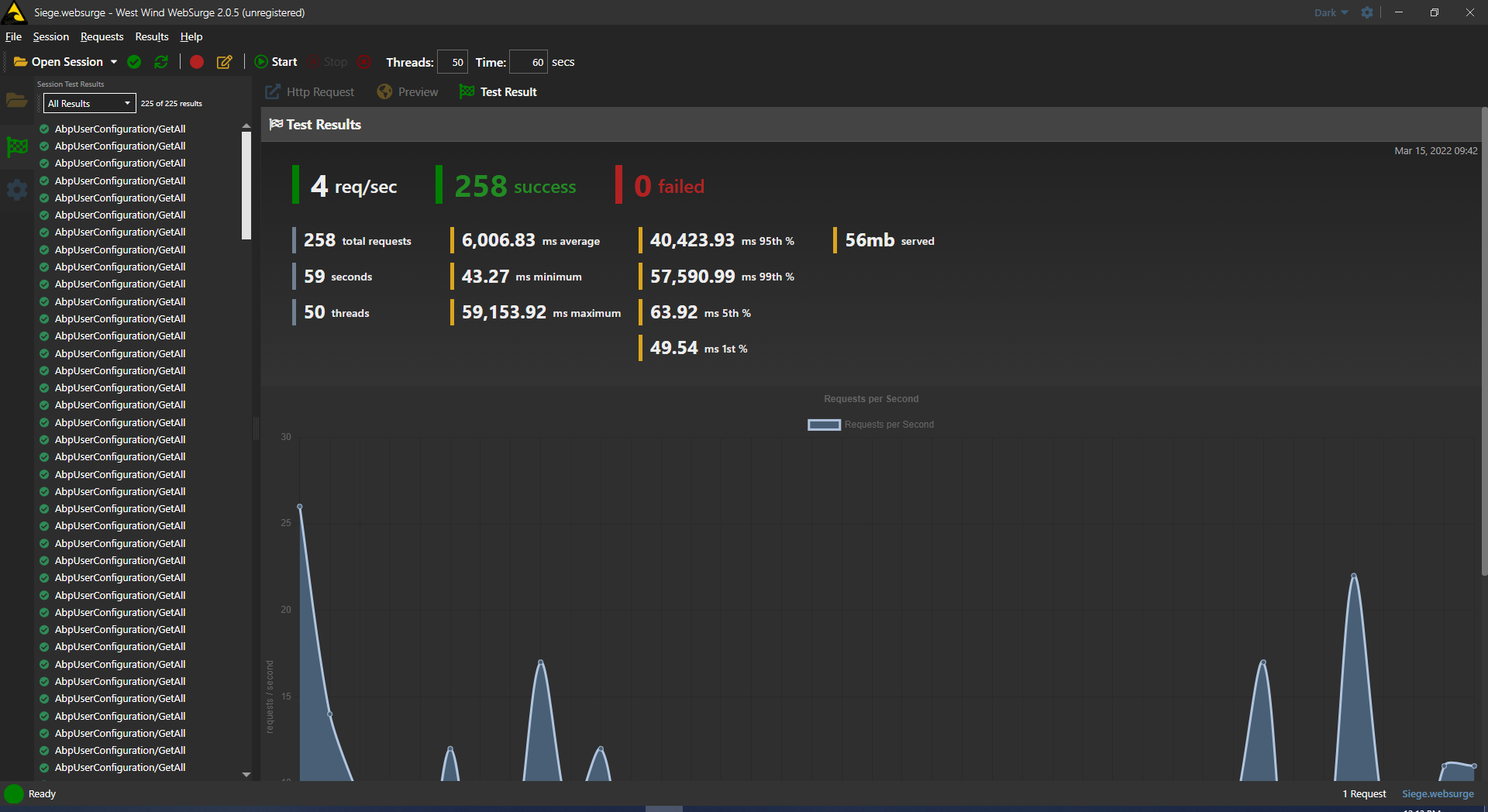

I have reduced my testing to just the /AbpUserConfiguration/GetAll endpoint, and I am simulating a load of 50 concurrent users to 10 seconds, using West Wind WebSurge (https://websurge.west-wind.com)

Unfortunately I'm seeing the throughput absolutely crippled.

i am comparing throughput between my old anz 6.9.0 stack my new anz 9.3.0 stack today

i'll keep you posted -Brian

-

0

We had similar issue, in the past, ANZ solution < 6 related to geographical distribution of UI/SQL/REDIS. We enabled REDIS and put REDIS/UI in same region to speed up the query and latency disappear.

I hope this can be usefull to find your issue

-

0

thank you very much @andmattia .

We're using Redis as well, deployed to Azure using Private Endpoints and Private DNS Zones to keep network traffic private with vNet integration. And yes, I 100% agree on keeping Redis in the same region.

Prior to ANZ's PerRequestRedisCache implementation, we'd leveraged work that another developer had posted about a combined Redis + InMemory Caching implementation, to avoid heavy network overhead to Redis. So we have a custom Cache implementation that has been working really well for us for a while now.

In my tests, I'm running everything locally (Visual Studio 2022 Community Edition, IISExpress) and West Wind WebSurge, and throwing a relatively light load at my app (~50 concurrent users for 60 seconds), all hitting the /AbpUserConfiguration/GetAll endpoint, I'm seeing the throughput absolutely crippled.

Over the last 2 days I've invested a lot of time learning

dotnet toolshttps://docs.microsoft.com/en-us/dotnet/core/diagnostics/#net-core-diagnostic-global-toolsspecifically using

dotnet-counters, I'm not seeing growth in the ThreadPool Queue Length, nor am I seeing growth in the ThreadPool Thread Count, which are typical indicators of bad threading, such asasync void,Task.Run, orDoAsync().GetAwaiter().Resultwhat I am seeing is that the

Monitor Lock Contention Countseems to spike periodically. Like into the thousands.I've been using

dotnet-dumpanddotnet-traceto try and capture stack dumps and traces to see what might be happening under the hood. I have also downloaded the source code for Abp, Castle, Castle.Windsor, and Log4Net, so that I can compile their code and load their .pdb files into my symbols when trying to debug a dump.I think I may have found something significant, but I've thought that 3-4 times already, so I'm trying not to get excited and jinx myself.

We'll see what the rest of the day brings, and I'll post another status update tomorrow morning. -Brian

-

0

you say that happens around 1000 calls... has you check on Iis configuration. I have a flash back that remwind me some parameters setee up to 1000 j( array or queue length).

which version of iis do you have?

so another tast could be start as console app if you can.

-

0

@andmattia

On my local I'm running Windows 10 and Visual Studio 2022 Community Edition. I'm unsure of the iisexpress version.

In my hosted Azure environments, we aren't using IIS. We're running on linux docker containers, so the webserver would be Kestrel.

The reference to 1000 earlier was to highligh the

Monitor Lock Contention Countmetric in thedotnet-countersdiagnostics tool. Whatever behavior is crippling my app seems to be coming in bursts. So it locks something, which seems to block pretty much everything, and then it releases. Probably a pretty typical locking behavior under a load. -

0

Hi @sedulen

I have made a suggestion in another post but I will write here as well. I think using Per Request Redis Cache (If you enable Redis) and using latest changes for async token validation from v11.1 will make a difference in our case.

-

0

Thank you@ismcagdas,

Does running the latest changes require an upgrade to .net core 6?

i have already backported the async token validation.

i have a tocket open with Microsoft for my Azure subscription as well so I'll keep you informed with anything they find

-Brian

-

0

Hi,

For "Per Request Redis Cache" and "Conventional interceptors" you will need .NET 5. For most of the other changes, it should be fine.

-

0

Hi @ismcagdas ,

Just to keep everyone informed, I tried to upgrade our production environment again this weekend, and I think we have run into issues once again and we will be forced to rollback.

At this point I think we can isolate our issue to the EF Core 2 to EF Core 3 breaking changes, specifically the "Cartesean Explosion" https://github.com/dotnet/efcore/issues/18022#issuecomment-542397085 https://docs.microsoft.com/en-us/ef/core/what-is-new/ef-core-3.x/breaking-changes#eager-loading-single-query

I am looking at our code now, and specifically considering the effort required to upgrade just our dotnet libraries to dotnet core 6 and the latest ABP / ANZ dotnet code.

I will let you know how it goes. -Brian